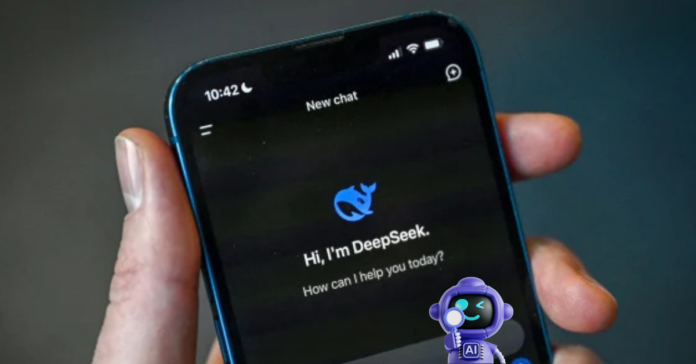

DeepSeek went viral this week. The chatbot app developed by the Chinese artificial intelligence lab became famous around the globe. Its AI models have made Wall Street analysts and technologists alike question whether the United States can stay at the front of the AI race and whether demand for AI chips will hold up. DeepSeek became known almost overnight and has captured media attention worldwide. So where did DeepSeek come from, and how did it rise so quickly?

DeepSeek’s trader origins

DeepSeek is backed by High-Flyer Capital Management, an AI-driven quantitative hedge fund from China. Liang Wenfeng, an AI enthusiast, co-founded High-Flyer with partners in 2015. Liang is believed to have dabbled in trading since his time as a student at Zhejiang University, and in 2019 he launched High-Flyer Capital Management as a hedge fund focused on developing and deploying AI algorithms.

In 2023, High-Flyer established DeepSeek as a lab focused on researching AI tools separate from its financial business. With High-Flyer among its investors, the lab spun off into its own company, also called DeepSeek. From day one, DeepSeek worked on building its own data center clusters for model training. However, like other AI firms in China, DeepSeek is affected by U.S. export bans on hardware. To train one of its later models, it had to use Nvidia H800 chips, a less powerful version of the H100 chip that is available to U.S. companies.

DeepSeek’s strong models

DeepSeek showcased its first models in November 2023: DeepSeek Coder, DeepSeek LLM, and DeepSeek Chat. But it was not until last spring, with the release of the next-generation DeepSeek-V2 family of models, that the AI industry started to pay attention. That general-purpose text- and image-analyzing system performed well on many AI benchmarks and cost far less to operate than comparable models of the time. This in turn pressured domestic competitors, including ByteDance and Alibaba, to cut usage prices for some of their models and to give others away for free. DeepSeek-V3, released in December 2024, brought the company still more fame.

Citing its own internal benchmark tests, DeepSeek claims that DeepSeek-V3 outperforms both downloadable, openly available models such as Meta’s Llama and “closed” models available only through an API, such as OpenAI’s GPT-4o. DeepSeek’s R1 “reasoning” model is equally noteworthy. DeepSeek claims that R1, released in January, performs as well as OpenAI’s o1 model on key benchmarks. As a reasoning model, R1 effectively checks its own work, which helps it avoid some of the pitfalls that usually trip up models.

Reasoning models do, however, generally take longer to produce answers than non-reasoning models, typically by seconds to minutes. The upside is that they tend to be more reliable in domains such as physics, science, and math. There is a downside to DeepSeek-V3, R1, and all of DeepSeek’s other models, however. Because they are Chinese-developed AI, they are subject to benchmarking by China’s internet regulator to ensure that their responses “fully embody core socialist values.” In DeepSeek’s chatbot app, for example, R1 will not answer questions about Tiananmen Square or Taiwan’s autonomy.

A disruptive approach

If DeepSeek has a business model, it is not clear what that model is. Either way, the company prices its products and services well below market value and gives some away for free. It has also taken no VC investment, despite intense investor interest. DeepSeek’s explanation is that efficiency breakthroughs have kept its costs very low. Some analysts, however, are skeptical of the company’s figures. Regardless, developers have taken to DeepSeek’s models, which are not open source as the phrase is generally understood but are made available under permissive licenses that allow commercial use. According to Clem Delangue, CEO of Hugging Face, one of the platforms hosting DeepSeek’s models, developers on Hugging Face have created over 500 “derivative” models of R1, which collectively have seen 2.5 million downloads.

DeepSeek’s success against larger, more established competitors has been described both as upending AI and as over-hyped. The company’s success was at least partly responsible for Nvidia’s stock price dropping 18 percent in January, and it prompted public commentary from OpenAI CEO Sam Altman. DeepSeek is now available on Microsoft Azure AI Foundry, which brings together AI-related offerings for businesses under a single umbrella. On Meta’s first-quarter earnings call, CEO Mark Zuckerberg said that investment in AI infrastructure would remain a “strategic advantage” for Meta. In March, OpenAI called DeepSeek “state-subsidized” and “state-controlled” and recommended that the U.S. government consider banning models from DeepSeek.

During Nvidia’s fourth-quarter earnings call, CEO Jensen Huang highlighted DeepSeek’s “extraordinary innovations,” saying that it and other “reasoning” models are good business for Nvidia because they need so much more compute. Some companies have banned DeepSeek, and so have entire countries and governments, including South Korea. New York state has also banned the use of DeepSeek on government devices. As for what the future holds for DeepSeek, only time will tell. Improved models are all but certain. However, the U.S. government appears to be growing wary of what it perceives as harmful foreign influence. In March, The Wall Street Journal reported that the U.S. was likely to ban DeepSeek on government devices.