The Gemma family of open models is central to our commitment to making useful AI technology accessible. Last month we celebrated Gemma’s first birthday, a milestone marked by remarkable adoption (more than 100 million downloads) and an active community that has created over 60,000 Gemma variants. This Gemmaverse continues to inspire us. Today, we are introducing Gemma 3, a collection of lightweight, state-of-the-art open models built from the same research and technology that power our Gemini 2.0 models. These are our most advanced, portable, and responsibly developed open models yet. They are designed to run fast, directly on devices, from phones and laptops to workstations, helping developers build AI applications wherever people need them. Gemma 3 comes in a range of sizes (1B, 4B, 12B, and 27B), so you can choose the model that best fits your hardware and performance requirements. In this post, we explain what Gemma 3 offers, introduce ShieldGemma 2, and show how you can become part of the ever-expanding Gemmaverse.

New ways developers can build with Gemma 3

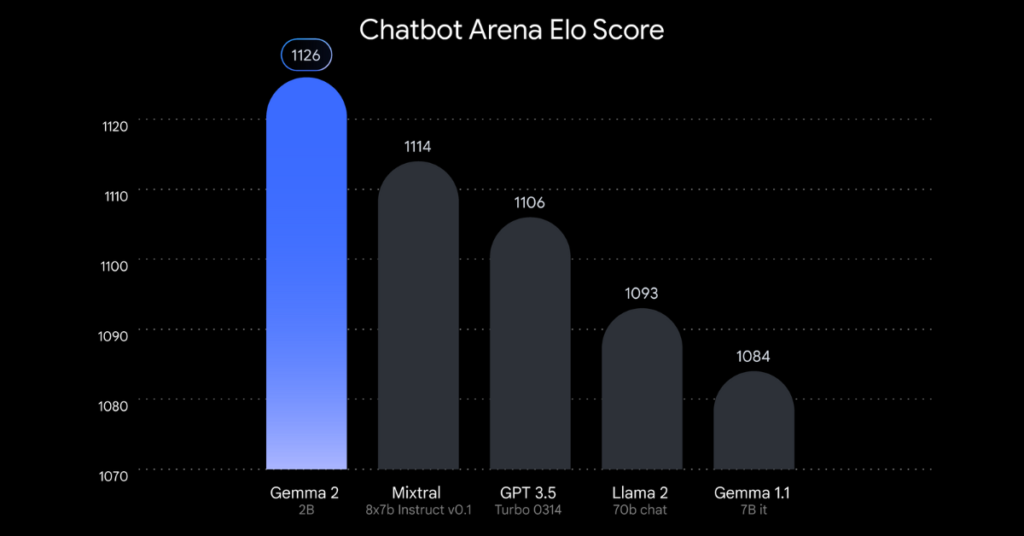

- Build with the world’s best single-accelerator model: In preliminary human preference evaluations on LMArena’s leaderboard, Gemma 3 outperforms Llama-405B, DeepSeek-V3, and o3-mini. This lets you create engaging user experiences that fit on a single GPU or TPU host.

- Go global in 140 languages: Build applications that speak your customers’ language. Gemma 3 offers out-of-the-box support for over 35 languages and pretrained support for over 140 languages.

- Build AI with advanced text and visual reasoning: Easily build applications that analyze images, text, and short videos, opening up new possibilities for interactive and intelligent applications.

- Handle complex tasks with an expanded context window: Gemma 3 offers a 128k-token context window, letting your applications process and understand large amounts of information.

- Automate tasks and build agentic experiences with function calling: Gemma 3 supports function calling and structured output, so your applications can trigger actions and return machine-readable results.

- Get high performance faster with quantized models: Gemma 3 introduces official quantized versions that reduce model size and computational requirements while maintaining high accuracy.
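Function calling generally works by having the model emit a structured call that the application parses and executes. The sketch below shows that application-side loop under stated assumptions: it presumes the model has been prompted to reply with a single JSON object of the form `{"name": ..., "arguments": {...}}`. That JSON format, the `get_weather` tool, and the `tool`/`dispatch` helpers are hypothetical illustrations, not Gemma 3’s documented output format or an official API.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tool registry: maps a function name the model may emit
# to the Python callable that implements it.
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so the model's output can refer to it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str, unit: str = "celsius") -> dict:
    # Hypothetical stub; a real tool would call a weather service.
    return {"city": city, "temperature": 21, "unit": unit}


def dispatch(model_output: str) -> Any:
    """Parse a JSON function call emitted by the model and execute it.

    Assumes (hypothetically) the model replied with exactly one JSON object,
    e.g. {"name": "get_weather", "arguments": {"city": "Paris"}}.
    """
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        # Refuse to run anything the application did not register.
        raise ValueError(f"model requested unknown tool: {name!r}")
    arguments = call.get("arguments", {})
    return TOOLS[name](**arguments)


if __name__ == "__main__":
    # Stand-in for text returned by the model after a function-calling prompt.
    reply = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
    print(dispatch(reply))
```

Keeping an explicit registry means the model can only trigger functions the application has deliberately exposed; the tool's return value would then typically be passed back to the model as context for its next turn.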
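To illustrate how quantization trades numeric precision for a smaller footprint, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. This is a generic textbook technique chosen for illustration; it is an assumption for teaching purposes and not the method used to produce the official Gemma 3 quantized checkpoints. The helper names `quantize_int8` and `dequantize_int8` are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization (generic illustration).

    Each float w is stored as round(w / scale), where scale maps the
    largest absolute weight onto 127. In a real runtime, storing int8
    values instead of float32 cuts weight memory roughly 4x.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp to the symmetric int8 range [-127, 127].
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 values and the scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.12, -0.5, 0.031, 0.25, -0.007]
    q, s = quantize_int8(w)
    w_hat = dequantize_int8(q, s)
    max_err = max(abs(a - b) for a, b in zip(w, w_hat))
    print("int8 values:", q)
    print(f"scale={s:.6f}, max reconstruction error={max_err:.6f}")
```

Because each value is rounded to the nearest multiple of `scale`, the reconstruction error per weight is at most half a step; production schemes usually refine this with per-channel or per-block scales to keep accuracy high.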

Rigorous safety protocols for building Gemma 3 responsibly

Open models require careful risk assessment, and our approach scales the intensity of testing to each model’s capabilities. Gemma 3’s development included extensive data governance, alignment with our safety policies through fine-tuning, and robust benchmark evaluations. While thorough testing of more capable models often informs our assessment of less capable ones, Gemma 3’s improved STEM performance prompted specific evaluations of its potential for misuse in creating harmful substances; the results indicate a low level of risk. As models become more powerful, it is increasingly important that we, together with the rest of the industry, develop risk-proportionate approaches to safety. We will continue to learn from and refine our safety practices for open models over time.

Built-in safety for image applications with ShieldGemma 2

Alongside Gemma 3, we are launching ShieldGemma 2, a powerful 4B image safety checker built on Gemma 3. ShieldGemma 2 provides an off-the-shelf solution for image safety, outputting safety labels across three categories: dangerous content, sexually explicit content, and violence. Developers can further customize ShieldGemma 2 to meet the safety needs of their users. Open and built for flexibility and control, ShieldGemma 2 draws on the performance and efficiency of Gemma 3 to promote responsible AI development.
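Because ShieldGemma 2 reports a label per safety category, an application typically converts those outputs into an allow/block decision using its own policy. The sketch below assumes the classifier’s output has already been turned into a probability per category; the category keys, the `POLICY_THRESHOLDS` values, and the `flagged_categories`/`is_image_allowed` helpers are hypothetical and not part of any ShieldGemma 2 API.

```python
from typing import Dict, List, Optional

# Hypothetical per-category thresholds an application might choose.
# A lower threshold means a stricter policy for that category.
POLICY_THRESHOLDS: Dict[str, float] = {
    "dangerous_content": 0.5,
    "sexually_explicit": 0.3,
    "violence": 0.5,
}


def flagged_categories(scores: Dict[str, float],
                       thresholds: Optional[Dict[str, float]] = None) -> List[str]:
    """Return the categories whose score meets or exceeds its threshold.

    Categories missing from `scores` are treated as 0.0 (not flagged).
    """
    limits = POLICY_THRESHOLDS if thresholds is None else thresholds
    return [cat for cat, limit in limits.items() if scores.get(cat, 0.0) >= limit]


def is_image_allowed(scores: Dict[str, float],
                     thresholds: Optional[Dict[str, float]] = None) -> bool:
    """Allow the image only if no category is flagged."""
    return not flagged_categories(scores, thresholds)


if __name__ == "__main__":
    # Hypothetical per-category probabilities for one image.
    scores = {"dangerous_content": 0.02, "sexually_explicit": 0.41, "violence": 0.10}
    print(flagged_categories(scores))  # ['sexually_explicit']
    print(is_image_allowed(scores))    # False
```

Keeping thresholds in a separate table makes it easy to tune each category to a given audience without changing the classifier itself.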

Ready to integrate with the tools you already use

Gemma 3 and ShieldGemma 2 integrate seamlessly into your existing workflows:

- Develop with your favorite tools: Gemma 3 works with Hugging Face Transformers, Ollama, JAX, Keras, PyTorch, Google AI Edge, Unsloth, vLLM, and Gemma.cpp, so you can use the framework that best fits your project.

- Start experimenting in seconds: Get instant access to Gemma 3 and start building right away. Explore its full potential in Google AI Studio, or download the models through Kaggle or Hugging Face.

- Customize Gemma 3 to your needs: Gemma 3 ships with a revamped codebase that includes recipes for efficient fine-tuning and inference. Train and adapt the model on your preferred platform, such as Google Colab, Vertex AI, or even your gaming GPU.

- Deploy your way: Gemma 3 offers multiple deployment options, including Vertex AI, Cloud Run, the Google GenAI API, local environments, and other platforms, so you can choose what best fits your application and infrastructure.

- Optimize performance on NVIDIA GPUs: NVIDIA has directly optimized the Gemma 3 models so you get strong performance on GPUs of any size, from Jetson Nano to the latest Blackwell chips. Gemma 3 is now featured in the NVIDIA API Catalog, enabling rapid prototyping with just an API call.

- Accelerate AI development across hardware platforms: Gemma 3 is also optimized for Google Cloud TPUs and works with AMD GPUs through the open-source ROCm™ stack. For CPU execution, Gemma.cpp offers a direct solution.

A “Gemmaverse” of models and tools

The Gemmaverse is a vast ecosystem of community-created Gemma models and tools, united by a shared drive for creativity and innovation. For example, SEA-LION v3 by AI Singapore helps break down language barriers across Southeast Asia; BgGPT by INSAIT, a Bulgarian-first large language model, shows how Gemma can support diverse languages; and OmniAudio by Nexa AI showcases the potential of on-device AI by bringing advanced audio processing to everyday devices. To further promote academic research breakthroughs, we are launching the Gemma 3 Academic Program, through which academic researchers can apply for up to $10,000 in Google Cloud credits to accelerate their Gemma 3-based research. The application form opens today and will remain open for four weeks.

Get started with Gemma 3

Gemma 3 marks the next step in our ongoing commitment to democratizing access to high-quality AI. Ready to explore Gemma 3? Here’s where to start:

- Instant exploration: Try Gemma 3 at full precision directly in your browser, with no setup needed, in Google AI Studio. You can also get an API key from Google AI Studio and use Gemma 3 with the Google GenAI SDK.

- Customize and build: Download Gemma 3 models from Hugging Face, Ollama, or Kaggle. Easily fine-tune and adapt the model to your unique requirements with Hugging Face’s Transformers library or your preferred development environment.

- Deploy and scale: Bring your custom Gemma 3 creations to market at scale with Vertex AI. Run inference on Cloud Run with Ollama. Get started with NVIDIA NIMs in the NVIDIA API Catalog.